What’s 🔥 in Enterprise IT/VC #420

Why we wrote our largest initial Inception check of $12.5M in Tessl to build AI Native Software Development, and what does this mean for Inception/seed fund sizing?

It’s been quite a week as we hosted our investors and a number of founders for our AGM (annual general investor meeting) in Miami 🏝️. The core theme was the Future of Intelligent Automation, a theme we started thinking about back in 2017. The shortcomings we saw back then (see deck) are finally solvable, and intelligent automation is ready for primetime now. It’s a reminder for all of us that this really does take a long time! I want to thank all of our LPs who have supported boldstart through the years, the many founders who came to present their vision for the agentic future (Clay, CrewAI, Kustomer, Noded, Waldo, and a number of founders from unannounced stealth cos), and our F500 AI panelists from some of the largest banks in the world.

It was also a big week because this happened 👇🏼

Guy’s announcement here:

Yes, we are absolutely 🔥 up to partner from Inception with founder and friend Guy Podjarny for a 3rd time (Blaze, sold to Akamai; Snyk, see our initial why-we-invested post) as Tessl embarks on a mission to bring AI Native Software Development to the world. However, I do want to share that this was our largest Inception check ever: $12.5M out of our $192,168,111 Fund VI (notice the Easter egg here). On the one hand, there was no doubt that we wanted to lead/co-lead Guy’s initial round; on the other hand, we were certainly sweating 😓 a bit as he told us folks were offering $50-100M sight unseen to help him get started.

Ultimately, we landed on a sizable Jumbo Inception round of $25M, co-leading with our friend Tom Hulme from GV, whom we got to know on the Snyk journey. Here are a few pictures from that weekend in Miami from earlier this year with Guy, my partner Eliot Durbin, and our trusty pals 🐶 (notice the boat name - I couldn’t resist 🤣).

Some may think we are nuts as fund managers 😜. We obviously don’t think so, but yes, it’s a huge initial check for a fund our size, with a follow-on check that is just as big. However, we’ve been preparing for this moment for a long time. Initial round sizes have been increasing since we started boldstart in 2010, and that trend has only accelerated with AI. Increasingly, we saw the multistage funds get bigger and bigger and point their howitzers at being first, for all the reasons I laid out in What’s 🔥 #365, “What is Inception Investing and The Race to Be First.”
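For the fund-sizing nerds, here’s a quick back-of-the-envelope sketch of what a check like this means for portfolio concentration. It assumes the follow-on is exactly equal to the $12.5M initial check (the post only says it’s “just as big”) and ignores fees and recycling, so treat it as rough arithmetic rather than our actual reserve model.

```python
# Back-of-the-envelope concentration math for a single Inception position.
# Assumption: the follow-on reserve equals the initial check ("just as big").
FUND_SIZE = 192_168_111       # Fund VI size in dollars, per the post
INITIAL_CHECK = 12_500_000    # initial Inception check
FOLLOW_ON = INITIAL_CHECK     # assumed equal follow-on reserve

def share_of_fund(amount: float, fund_size: float = FUND_SIZE) -> float:
    """Return an amount as a percentage of total fund size (fees ignored)."""
    return 100 * amount / fund_size

initial_pct = share_of_fund(INITIAL_CHECK)
total_pct = share_of_fund(INITIAL_CHECK + FOLLOW_ON)
print(f"initial: {initial_pct:.1f}% of fund, with follow-on: {total_pct:.1f}%")
```

Roughly 6.5% of the fund up front and about 13% with the follow-on, which is why a fund our size has to be built for a handful of these Jumbo rounds rather than treating them as one-offs.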

As we’ve evolved from a $1M fund to almost a $200M fund today, we had to answer one question: if some of the best founders can now raise $6M, $10M, $20M or more, should we invest, and if so, how big would we need to be to still maintain ball control (lead/co-lead)? Or should we just raise a smaller fund, decline to lead/co-lead any rounds bigger than say $5-6M, and let the multistage firms own that space? Ultimately, we decided that to fulfill our mission of Inception Investing in the best founders, whether first timers or multitimers, we unequivocally must play ball in the Jumbo rounds to give our investors the opportunity to generate the best returns. If not, we would already have missed the chance, for example, to co-lead Protect AI’s initial $10.5M round in early 2022 on its way to building a leading AI security platform with a number of Global 200 customers and raising a Series B a few months ago at a $460M post-money valuation.

So I’m glad we made the decision to invest across the whole Inception landscape. At Inception, it really boils down to answering two questions: if we’re right, how big could this be and what could our return look like, and what gives this team the right to win? After partnering with Guy through the life of boldstart, from Fund I to Fund III to now Fund VI, it’s pretty clear that he is one of those special founders we can’t miss (Snyk is a multibillion-dollar company), and we’re thrilled to join the journey again. Also a huge congrats to the Tessl team on closing an additional $100M Series A led by Index, with participation from Accel and previous seed leads boldstart and GV!

Here’s more on why we invested in Tessl and what AI Native Software development is.

As always, 🙏🏼 for reading and please share with your friends and colleagues.

Scaling Startups

#💯 the importance of a common vision, from Steve Jobs - watch this, absorb it, and apply it

#Wonder why some of these Inception rounds are so massive for AI startups? Salary inflation is real: even the median engineer at OpenAI is at $500K 🤯. Hiring Tier 1 talent requires a big mission and also real 💰

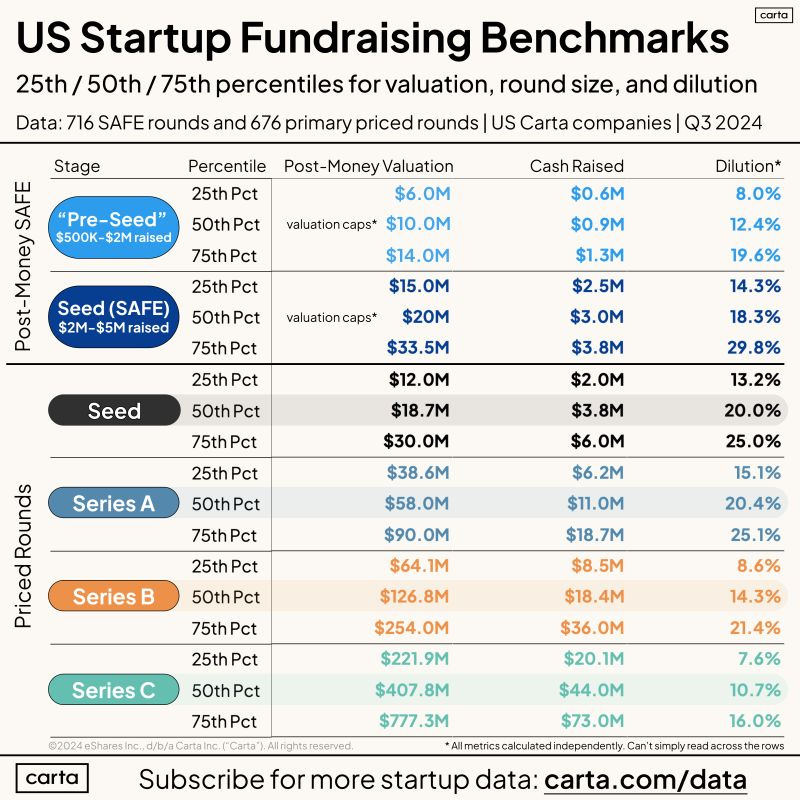

#current state of valuations, per Peter Walker of Carta: the more money raised, the higher the valuation and the dilution…
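A quick worked example of why bigger rounds can mean more dilution even at higher valuations. Dilution here is simply new money divided by post-money valuation (pre-money plus the raise); the round sizes and valuations below are hypothetical, picked for illustration, and are not Carta’s data.

```python
def dilution(raise_amount: float, pre_money: float) -> float:
    """Fraction of the company sold in a priced round: new money / post-money."""
    post_money = pre_money + raise_amount
    return raise_amount / post_money

# Hypothetical rounds (not Carta data): the Jumbo round has a much higher
# valuation but still sells a bigger slice of the company.
small = dilution(raise_amount=5_000_000, pre_money=20_000_000)    # 5 / 25
jumbo = dilution(raise_amount=25_000_000, pre_money=75_000_000)   # 25 / 100
print(f"small round: {small:.0%} dilution, jumbo round: {jumbo:.0%} dilution")
```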

Enterprise Tech

#a16z’s new thesis on AI - “Intelligent Automation”

Just a reminder that we’ve been down this road before… check out our boldstart CXO deck from 2018 - all of the issues we saw back then are finally solvable today

and our F500 Intelligent Automation Dinner in 2019, where we discussed the limitations of RPA at the time and the need for bots that can make decisions powered by AI - the time is now!

3. There is no intelligence in intelligent automation: bots can’t do exception handling and still need orchestration. The next wave will incorporate ML/AI to enable bots to make decisions

4. Many bots break on a daily basis; some of these corporations have automation centers of excellence fixing them, while others work with outsourced providers like Wipro

5. RPA needs to be easier to deploy, install, and manage; services still account for 50% of the cost of an RPA implementation

No agreed unit of measurement for ROI: # of bots, human FTEs, or # of tasks

6. RPA is task-oriented rather than process-oriented: bots do a good job of executing tasks, but when errors occur, you can’t inject humans into the process and make it end-to-end

#AI agent stack - really great overview from Charles Packer of Letta

#OpenAI Shifts Strategy as Rate of ‘GPT’ AI Improvements Slows (The Information) - they’re just plain running out of high-quality training data, and if true, this has huge implications

The Orion situation could test a core assumption of the AI field, known as scaling laws: that LLMs would continue to improve at the same pace as long as they had more data to learn from and additional computing power to facilitate that training process.

In response to the recent challenge to training-based scaling laws posed by slowing GPT improvements, the industry appears to be shifting its effort to improving models after their initial training, potentially yielding a different type of scaling law.

Orion was trained in part on AI-generated data, produced by other OpenAI models, including GPT-4 and recently released reasoning models, according to an OpenAI employee. However, such synthetic data, as it is known, is leading to a new problem in which Orion may end up resembling those older models in certain aspects, the employee said.

#this is a massive deal - agents with virtual credit cards that can actually make purchase decisions, and it’s also cool to see Stripe use portfolio co CrewAI as one of the integrations!
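To make the spending-control idea concrete, here’s a toy sketch of the approve/decline logic Stripe describes: check that an authorization matches what the agent said it would buy, and that it fits within the agent’s budget. To be clear, this is NOT Stripe’s API. `PurchaseIntent`, `Authorization`, and `AgentBudget` are hypothetical names, and a real integration would plug a check like this into Stripe Issuing’s own authorization handling, per Stripe’s docs.

```python
from dataclasses import dataclass

@dataclass
class PurchaseIntent:
    """What the agent said it would buy (hypothetical structure, not Stripe's)."""
    merchant: str
    max_amount_cents: int

@dataclass
class Authorization:
    """A simplified card authorization request (hypothetical, not Stripe's object)."""
    merchant: str
    amount_cents: int

class AgentBudget:
    """Tracks an agent's remaining budget and approves or declines charges."""

    def __init__(self, budget_cents: int):
        self.remaining_cents = budget_cents

    def decide(self, intent: PurchaseIntent, auth: Authorization) -> bool:
        # Decline if the merchant doesn't match the agent's stated purchase intent.
        if auth.merchant != intent.merchant:
            return False
        # Decline if the charge exceeds the amount the agent said it would spend.
        if auth.amount_cents > intent.max_amount_cents:
            return False
        # Decline if the charge would blow through the agent's overall budget.
        if auth.amount_cents > self.remaining_cents:
            return False
        # Approve and draw down the budget; declines leave it untouched.
        self.remaining_cents -= auth.amount_cents
        return True

# Usage: a $500 budget for a hypothetical travel-booking agent.
budget = AgentBudget(budget_cents=50_000)
intent = PurchaseIntent(merchant="Example Airline", max_amount_cents=40_000)
approved = budget.decide(intent, Authorization("Example Airline", 38_500))
wrong_merchant = budget.decide(intent, Authorization("Other Merchant", 1_000))
over_budget = budget.decide(intent, Authorization("Example Airline", 20_000))
print(approved, wrong_merchant, over_budget, budget.remaining_cents)
```

The design choice worth noting is that every check fails closed: anything that doesn’t match the stated intent gets declined, which is what you want when software, not a person, is holding the card.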

Agentic workflows need not have exclusively virtual outcomes. Imagine a travel agent that can book flights for your company. Using LLMs and function calling we can assemble a set of agents that can search for flights online, return options, and ultimately identify a booking URL. With Stripe, you can embed financial services and enable the automation of the purchase flow as well.

Using Stripe Issuing, you can generate single-use virtual cards that agents can use for business purchases. This enables your agents to spend funds. The Issuing APIs allow you to approve or decline authorizations programmatically, ensuring your purchase intent matches the authorization. Spending controls allow you to set budgets and limit spending for your agents.

#Andrew Ng on the evolution of LLMs from being “optimized to answer people’s questions to agentic workflows, giving a huge boost to agentic performance!”
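Andrew Ng’s point about function calling (the model reads a description of the available functions and then, hopefully, emits tokens requesting the right call) is easier to see in code. Below is a minimal, provider-agnostic sketch, assuming the model replies with a JSON object naming one tool. `search_flights`, `TOOLS`, and `dispatch` are hypothetical names, no LLM is actually called (the model output is a hard-coded stand-in), and real providers each have their own function-calling formats.

```python
import json

def search_flights(origin: str, destination: str) -> list[str]:
    # Hypothetical stand-in; a real agent would call a flight search service.
    return [f"{origin}->{destination} 08:00", f"{origin}->{destination} 17:30"]

# Hypothetical tool registry: the description is what the model would see,
# and "fn" is the Python function the app actually runs.
TOOLS = {
    "search_flights": {
        "description": "Search for flights between two airport codes.",
        "parameters": {"origin": str, "destination": str},
        "fn": search_flights,
    },
}

def tool_descriptions() -> str:
    """Render the tool list as plain text to include in the model prompt."""
    lines = []
    for name, spec in TOOLS.items():
        params = ", ".join(f"{p}: {t.__name__}" for p, t in spec["parameters"].items())
        lines.append(f"{name}({params}) - {spec['description']}")
    return "\n".join(lines)

def dispatch(model_output: str):
    """Parse the model's requested call and run it only if it is well-formed."""
    try:
        call = json.loads(model_output)
    except json.JSONDecodeError as err:
        raise ValueError("model did not emit valid JSON") from err
    if not isinstance(call, dict):
        raise ValueError("expected a JSON object describing the call")
    name = call.get("name")
    if not isinstance(name, str) or name not in TOOLS:
        raise ValueError(f"unknown tool: {name!r}")
    spec = TOOLS[name]
    args = call.get("arguments", {})
    expected = spec["parameters"]
    # Reject missing, extra, or wrongly typed arguments before executing anything.
    if not isinstance(args, dict) or set(args) != set(expected):
        raise ValueError("arguments do not match the tool's parameters")
    if not all(isinstance(args[k], t) for k, t in expected.items()):
        raise ValueError("argument types do not match the tool's parameters")
    return spec["fn"](**args)

# Stand-in for tokens generated by an LLM; no model is called here.
fake_output = '{"name": "search_flights", "arguments": {"origin": "SFO", "destination": "JFK"}}'
print(tool_descriptions())
print(dispatch(fake_output))
```

The validation in `dispatch` is where reliability shows up in practice: prompting or fine-tuning makes a correct call more likely, but the app still has to check the output before acting on it.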

As agentic workflows mature, here is what I am seeing:

First, many developers are prompting LLMs to carry out the agentic behaviors they want. This allows for quick, rich exploration!

In a much smaller number of cases, developers who are working on very valuable applications will fine-tune LLMs to carry out particular agentic functions more reliably. For example, even though many LLMs support function calling natively, they do so by taking as input a description of the functions available and then (hopefully) generating output tokens to request the right function call. For mission-critical applications where generating the right function call is important, fine-tuning a model for your application’s specific function calls significantly increases reliability. (But please avoid premature optimization! Today I still see too many teams fine-tuning when they should probably spend more time on prompting before they resort to this.)

Finally, when a capability such as tool use or computer use appears valuable to many developers, major LLM providers are building these capabilities directly into their models. Even though OpenAI o1-preview’s advanced reasoning helps consumers, I expect that it will be even more useful for agentic reasoning and planning.

#more on AI scaling laws from Ilya, formerly of OpenAI - has it really plateaued?

#queuing this up for the weekend (read the 🧵 too) - covers some of the AI scaling law questions and what AGI looks like and when 🤔

#speaking of AI scaling laws and the need for more data, we have to remember that there is so much dark data sitting behind enterprise firewalls that models don’t see. Pretty cool, and no surprise, that F500s like Bayer are now using that data to create small industry-specific models (WSJ)

Bayer is known for selling seeds. Now it sells seeds and artificial intelligence. Microsoft today announced it is working with the German pharmaceutical-and-agricultural group and other companies on specialized AI models fine-tuned on industry-specific data.

The companies can now list and monetize those models on Microsoft’s online model catalog. For Bayer that means an AI model fine-tuned with its data and designed to provide answers on agronomy and crop protection is available to be licensed by its distributors, new AgTech startups, and even potentially competitors. The model can answer questions about ingredients in an insecticide or whether a product could be applied to cotton, for example.

Microsoft expects this new approach, built on its Phi family of small language models and preloaded with industry knowledge, will accelerate enterprise generative AI adoption, a yearslong effort built on the understanding that off-the-shelf AI models often don’t cut it for business needs.

Companies now find it critical to augment today’s general models with more industry-specific or business-specific data if they’re going to be useful.

#👀 Anysphere, creator of AI coding assistant Cursor, is rumored to be raising at a $2.5B valuation with all of the big firms chasing - revenue apparently went from $4M per year to $4M per month 🤯 (TechCrunch)
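Quick sanity check on that growth claim: $4M a month annualizes to a $48M run rate, or 12x the $4M ARR reported for April. This assumes monthly revenue holds flat when annualizing, the usual run-rate simplification, and uses only the figures from the TechCrunch piece.

```python
# Put both reported figures on the same annualized (ARR) basis.
april_arr = 4_000_000              # $4M ARR in April, per TechCrunch
latest_monthly = 4_000_000         # $4M per month "as of last month"
latest_arr = latest_monthly * 12   # run rate, assuming flat monthly revenue

growth_multiple = latest_arr / april_arr
print(f"run rate: ${latest_arr / 1e6:.0f}M ARR, {growth_multiple:.0f}x since April")
```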

There isn’t a shortage of AI-powered coding assistance startups. They include Augment, Codeium, Magic, and Poolside.

However, Cursor has become one of the most popular. Its developer, Anysphere, has seen its revenue grow from $4 million annualized recurring revenue (ARR) in April to $4 million a month as of last month, according to a person with direct knowledge of the company’s financials. The company is experiencing faster user adoption and growth compared to other coding assistant providers, another person said.

Such fast growth has VCs flocking to it. Anysphere has received unsolicited offers valuing the company at as much as $2.5 billion from Benchmark, Index Ventures, and previous investors Andreessen Horowitz and Thrive, among others, the person said.

#Writer AI raises $200M at a $1.9B valuation (TechCrunch) with a big vision (see post from CEO) it calls the Future of Enterprise Work: building an autonomous AI platform for the enterprise - all roads lead to Glean, Perplexity, and Writer battling it out for the AI platform 💰

In the coming year, you’ll see Writer double down on end-to-end automation around our customers’ mission-critical workflows including:

Better tool use and workflows: If AI is going to become the connective tissue of the enterprise it needs to connect to everything. To do this we’re building powerful workflow tools that allow businesses to codify their business logic, and create AI blueprints of their mission-critical workflows.

More observability and control: You’ll also see us ship better tools for monitoring AI across your business, evaluating its impact, and controlling what it can and can’t do.

Improved reasoning: We’re going to build deeper, more efficient, models with larger context windows that can reason through a complex series of actions. This includes more domain-specific models that, just like our Healthcare and Finance models, will be top-ranked, highly efficient, and available directly from the Writer platform.

#great way to see what’s trending in Silicon Valley with YC’s latest Request for Startups

Markets

#Multiples - outside of Palantir at 44x, the Top 10 is mostly at 12-14x NTM, with the highest forecasted growth at 25%. Also notice that, outside of OneStream, these companies all have pretty sizable market caps, which means scale matters: OneStream is at $7B, Guidewire at $16B, and the next lowest is Samsara at $27B