What’s 🔥 in Enterprise IT/VC #384

Where does value accrue in the AI stack? We're all still learning!

Another week has gone by, and yet another LLM was released surpassing existing industry benchmarks - this time it was Claude from Anthropic.

These are pretty 🤯 numbers. Opus is for Claude Pro users, Sonnet remains free, and Haiku is the “fastest and most cost-effective model on the market for its intelligence category. For the vast majority of workloads, Sonnet is 2x faster than Claude 2 and Claude 2.1, while Opus is about the same speed as past models.”

As I’ve written in the past, the infrastructure layer is moving so fast that being a first mover is not necessarily an advantage. In addition, many founders who are building now are thinking about how they can swap today’s OpenAI model, for example, for whatever may be better in the future - just wait, OpenAI’s next model is supposed to be even better.
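For the builders reading, here is a minimal sketch of what "swappable" can look like in practice, assuming the app only ever talks to a thin interface rather than to a vendor SDK directly. Everything here is hypothetical: `ModelRouter`, `fake_openai` and `fake_anthropic` are stand-in names that return canned strings, not real API calls.

```python
from dataclasses import dataclass
from typing import Callable, Dict

# A "provider" is just a function from prompt text to completion text.
Provider = Callable[[str], str]


def fake_openai(prompt: str) -> str:
    # Hypothetical stand-in for a vendor SDK call (no network access).
    return f"[openai-stub] {prompt}"


def fake_anthropic(prompt: str) -> str:
    # Hypothetical stand-in for a second vendor.
    return f"[anthropic-stub] {prompt}"


@dataclass
class ModelRouter:
    """Keeps application code independent of any single model vendor."""
    providers: Dict[str, Provider]
    active: str

    def complete(self, prompt: str) -> str:
        # Application code calls this and never imports a vendor SDK itself.
        return self.providers[self.active](prompt)

    def swap(self, name: str) -> None:
        # Switching models is a one-line change instead of a rewrite.
        if name not in self.providers:
            raise KeyError(f"unknown provider: {name}")
        self.active = name


router = ModelRouter(
    providers={"openai": fake_openai, "anthropic": fake_anthropic},
    active="openai",
)
first = router.complete("Summarize this ticket")
router.swap("anthropic")
second = router.complete("Summarize this ticket")
print(first)
print(second)
```

The point of the design is simply that prompts, evals and product logic sit above the interface, so a better model next quarter is a config change and not a migration.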

With Anthropic’s most recent release, there has been lots of discussion around where value accrues in the AI stack - LLMs, infra, apps.

Marc Benioff from Salesforce chimes in here:

AI's true gold isn't in the UI or model—they're both commodities. What breathes life into AI is the data & metadata that describes the data to the model—just like oxygen for us. The future's fortune lies in our data. Yes, Data is the new gold! 💖

I also suggest checking out this 🧵 from Jason Warner, ex-CTO of GitHub and co-founder/CEO of PoolsideAI, which is building a new foundational model for code along with apps on top, it’s CoPilot…

Have a long flight so time to do a thread on a question I get in some form at least half dozen times a week

Where will value accrue in AI from here

Think: foundation models, apps, middleware, full-stack blah blah blah etc

I really like Jason’s thinking around full stack foundation models which includes the LLM and the apps on top:

Which leads to the 2nd foundation model category

#1b Full stack foundation model companies

These are companies that are building a frontier foundation model but it's part of their offering

I put poolside here fwiw, we are building a foundation model, a platform, and apps on it

We have a *specific* use case we care about. It allows us to be more focused and makes clear who would care about integrating with us. Also makes clear how to evaluate performance etc

1a and 1b together make up the entirety of the frontier AI companies building foundation models

Note: I really really really would think all 1a companies end up trying to move into 1b companies at some point unless they have a benevolent benefactor for life. And even there MSFT hedged their OpenAI bet a few times already

If one can build a domain specific LLM with access to data that others cannot get with the idea of building focused apps on top of your own specific model like code or robotics, then one will have the ability to create significant value. This model is not for the faint of heart as training LLMs costs significant 💰 and Poolside, for example, has already raised a $126M seed round 😲.

Next up is the infrastructure layer, which includes all of the tools that infra folks and developers will use to build these applications and deploy them to the real world. This includes your traditional cloud vendors like Microsoft and AWS Bedrock, who are best positioned to win here, the 37 AI observability tools we’ve already seen, frameworks to build and deploy agents, 27+ vector databases...you get the point. There will be some huge winners here for sure, as incumbents like MongoDB may find it hard to get the developer traction needed for this new category, but man, I’ve seen such mountains of 💰 poured into this space that it’s hard to tell who will win. In addition, when you have foundational model companies like OpenAI offering their own version of fine tuning in a simple framework, one has to wonder what else every other LLM provider will offer to make it easy for devs to call an API and build and deploy. Buyer beware in this category.

Last but not least we have the product folks, yes the products themselves, and I’m not talking about thin wrappers and beautiful demos, but the products which just blow people away.

Here are my thoughts which, btw, I’m sure will change again in the next few months as this market is moving so fast!

First thing we need to remember is that AI is a technology and not a product. Zuck explains it well from Meta’s most recent earnings call transcript:

I know that some people have questions about how we benefit from open sourcing the results of our research and large amounts of compute, so I thought it might be useful to lay out the strategic benefits here. The short version is that open sourcing improves our models, and because there's still significant work to turn our models into products, because there will be other open source models available anyway, we find that there are mostly advantages to being the open source leader and it doesn't remove differentiation from our products much anyway…

And again, we typically have unique data and build unique product integrations anyway, so providing infrastructure like Llama as open source doesn't reduce our main advantages. This is why our long-standing strategy has been to open source general infrastructure and why I expect it to continue to be the right approach for us going forward

So where a company sits in the stack is less important to me than thinking about products and the user, and how the initial experience leads to a long-term advantage.

Whether LLM, infra or app, show me a product that feels magical 🪄, that feels 10x better to the end user whether a dev tool, infra monitoring, cybersecurity or app, and you have a chance. Incremental won’t do - and yeah that magical experience is likely powered by a data moat but also involves the basics - UI, UX, data, etc.

This magic 🪄, btw, can’t be offered by thin wrappers, as it requires a deep understanding of the user - so it is likely powered by proprietary, domain-specific data, perhaps using RAG or a fine-tuned model. So I subscribe to Benioff’s camp about the data, but I also believe the UI is extremely important.

Some examples: Perplexity in search (not enterprise, but it illustrates my point) is blazing fast and just works. Superhuman shows that the simplest use cases of AI are often the most powerful (see the video from What’s 🔥 #383 last week, and think about the amount of email data it is sitting on to create personalized responses; also proud to be first investor). LangChain and CrewAI also have amazing developer experiences. With respect to Crew, it’s a super easy and powerful AI agent framework (video of how easy it is to build a crew of financial analysts) that’s attracting a ton of attention.

What's the cybersecurity equivalent? The Datadog equivalent?

There are so many amazing, talented founders starting new companies, and IMO there is no better time to start a company and "Inception Invest" but let’s change the conversation from a macro one of where value accrues in the AI stack to the micro and first principles thinking!

What problem are you solving, for whom, and how are you doing it uniquely to make an end user’s life 10x better with your product than without?

Answer the “why now” - what can exist today that previously could not because of these new LLMs?

What is your data acquisition strategy + advantage?

How will you outmaneuver incumbents and how will your product be built to last?

On the latter, there has also been a ton of debate.

Incumbents have the data and distribution advantage, and Microsoft is crushing it, but look at Google. Did you see that Gemini launch - what a disaster! Not every incumbent is like Microsoft - some, like Google, can’t get out of their own way when launching one of the most important products in recent history.

There are no absolutes!

If the Internet felt like 🐶 years, then AI feels like 😽 years (first 2 human years = 24 cat years).

It’s time to compete, to have some fun, and to deliver some magical experiences that aren’t just demos but go much deeper. It’s about solving the problem for the end user and making that experience magical. There will be lots of losers but some insanely big winners as well. Don’t think stack first, think user first.

The good news is that the universal principles of success always matter - founders, team and execution always win, and this is no different in 2024 wherever you may roam on the AI stack. Like the Metallica song, remember this:

Oh, but I'll take my time anywhere

Free to speak my mind anywhere

And I'll redefine anywhere

Anywhere I roam

Where I lay my head is home, yeah

Own it!

As always, 🙏🏼 for reading and please share with your friends and colleagues.

Scaling Startups

JJ from OSS Capital crushed it with this video clip on Why Nvidia wins - yes, it’s about Jensen and what a true founder mindset is - must watch!

How to launch and crush your Product Hunt launch (Lenny Rachitsky)

Enterprise Tech

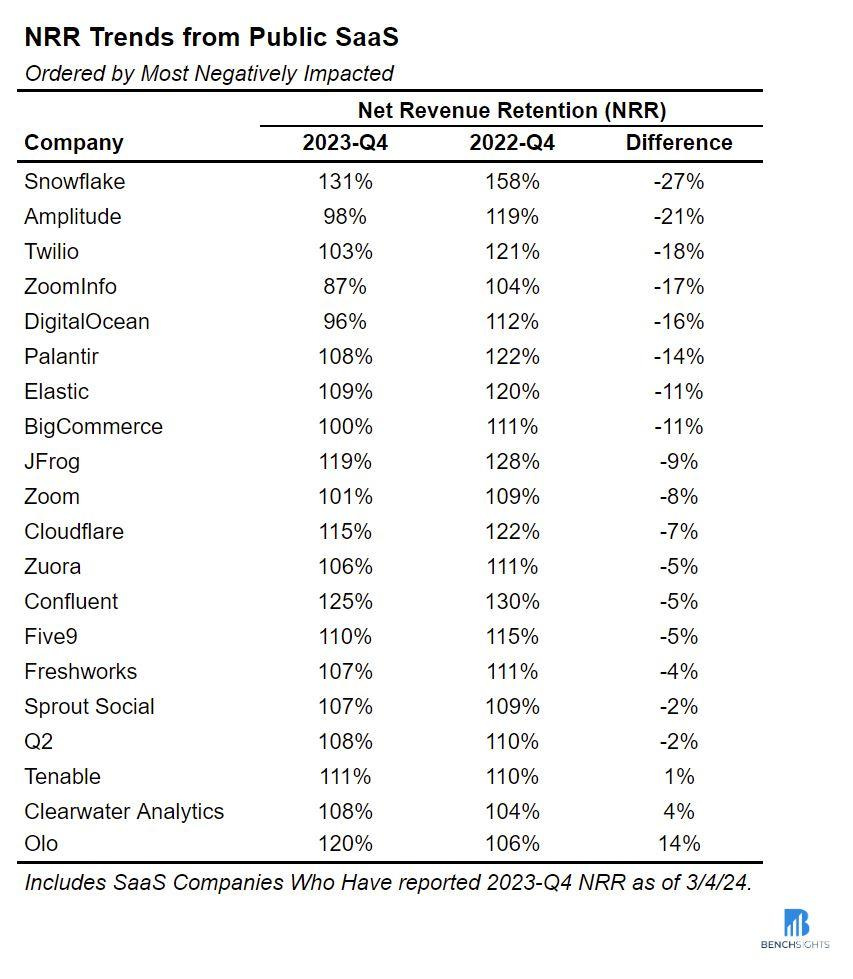

Best-in-class Net Dollar Retention (NDR) revisited - here’s data from David Spitz 👇🏼 - I do believe we are at the bottom, with best in class now in the 115% range, down from 130% (see What’s 🔥 #381 for a deeper dive into this, along with SaaSletter from Matt Harney for more)
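As a quick refresher on the arithmetic behind that 115%, here is a small sketch using the standard NDR definition: take the recurring revenue of an existing customer cohort at the start of the period, add expansion, subtract contraction and churn, and divide by the starting figure (new customers are excluded). The dollar amounts below are made-up illustrative numbers, not figures from David Spitz's data.

```python
def net_dollar_retention(starting_arr: float, expansion: float,
                         contraction: float, churn: float) -> float:
    """NDR for one cohort over one period, as a ratio (1.15 means 115%)."""
    if starting_arr <= 0:
        raise ValueError("starting_arr must be positive")
    # Revenue from new customers is deliberately not part of this formula.
    ending_arr = starting_arr + expansion - contraction - churn
    return ending_arr / starting_arr


# Illustrative cohort: $10M starting ARR, $2.5M upsell,
# $0.4M in downgrades and $0.6M lost to churned customers.
ndr = net_dollar_retention(
    starting_arr=10_000_000,
    expansion=2_500_000,
    contraction=400_000,
    churn=600_000,
)
print(f"NDR: {ndr:.0%}")
```

In this made-up case the cohort grows 15% with zero new logos, which is what "best in class" in the 115% range means.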

Former $NVDA employee sharing his thoughts on the current GPU "craze" (AlphaSense)

- He believes inference is becoming increasingly important as people have almost finished the trial-and-error period to instrument the network and train it. Inference will face big challenges with large memory requirements.

- The memory problem is being addressed from a few angles: quantization, knowledge distillation, and looking for alternatives to the transformer-based architecture such as Mamba. But it's all still in the early or academic stages.

- The prices of renting GPUs will fall by a factor of 10x in the future, with some alternatives coming, like $AMD's MI300X.

How much value does Microsoft Copilot really add to its users? From TechStockFundamentals - excerpt below but there’s a lot more in the 🧵:

MS TMT: $MSFT Head of Office 365 / Dynamics 365 / other divisions did good job explaining long-term opportunity for AI Co-Pilot while keeping expectations measured near-term.

Highlights

-O365 has 400m users and believe AI helps address additional 2b employees who don’t sit at desks (handheld devices they use need to be secure, communications via Teams, audio communication w/ Copilots)

-E5 SKU is only 12% penetrated; biggest driver for upgrade is additional security

-Priced Co-pilot as add-on to make it accessible to everyone. Typical worker is 30% more productive w/ best users saving 10 hours a month.

-Rolling out role based co-pilots – finance, sales, etc. for another $20 / month premium

-Co-pilot is great but not dramatically changing standard sales cycle of evaluation, training, purchase negotiation – have to work with customers to show them the behaviors that create the most value

-Believe Co-pilot will be heart of the office product over time. Focused on making it so compelling that 100% of users buy it. Goal is help people identify the drudgery in each of their jobs and take it over.

Probably one of those things where everyone overestimates functionality / adoption in one year but underestimates what happens over 3 years.

Another early exit for an Israeli cybersecurity company - Flow in the DSPM space is rumored to have been bought by CrowdStrike for $200M after having raised $13M. This follows Dig in the DSPM space being bought by Palo Alto Networks for a rumored $400M and IBM buying Polar Security for a rumored $60M. Cyera, also in the DSPM space, is raising a large round at the moment.

Get your 🍿 as this will get super interesting with the incumbents going after the startups that are reaching early escape velocity. I placed my bet many years ago on BigID (a portfolio co), which is much more comprehensive than just an acronym, DSPM, and offers a full platform for data security, privacy, compliance, and governance: enabling organizations to proactively discover, manage, protect, and get more value from their data in a single platform for data visibility and control.

So Cloudflare released its own AI Firewall this week, and IMO this is just table stakes in AI security (Palo Alto Networks is also working on one). Everyone will have an AI Firewall, and way too many startups are still getting funded here as well. The bigger question is what's next? How does one drive true MLSecOps with this as one component of a larger picture? More here from Dark Reading…

No infrastructure co is immune - even with amazing community growth, giving away too much for free is not a strategy, and eventually one must get financially fit. PlanetScale, one of the hottest cloud-scale DBs around, announced some important changes this past week - the free Hobby plan is over as it won’t pay the bills. In addition, it buried a RIF, mostly in sales and marketing, which may be larger than indicated in the post. Remember, prematurely scaling, esp. in sales and marketing, can kill a startup, even one that has raised >$100M.

PlanetScale is committed to providing a reliable and sustainable platform for our customers, not just in the short-term, but forever. For this reason, we are prioritizing profitability…

As of today, PlanetScale can project profitability after the following key decisions: to part ways with members of our team (primarily Sales and Marketing) and sunsetting our Hobby plan. I would like to express my extreme gratitude for the people we are parting ways with today.

Our Hobby plan will be retired on April 8th, 2024.

Do we need prompt engineering? Here’s data from a recent study saying prompts automatically generated by each model do better than prompts written by people (IEEE)

Battle and Gollapudi decided to systematically test how different prompt-engineering strategies impact an LLM’s ability to solve grade-school math questions. They tested three different open-source language models with 60 different prompt combinations each. What they found was a surprising lack of consistency. Even chain-of-thought prompting sometimes helped and other times hurt performance. “The only real trend may be no trend,” they write. “What’s best for any given model, dataset, and prompting strategy is likely to be specific to the particular combination at hand.”

According to one research team, no human should manually optimize prompts ever again.

There is an alternative to the trial-and-error-style prompt engineering that yielded such inconsistent results: Ask the language model to devise its own optimal prompt. Recently, new tools have been developed to automate this process. Given a few examples and a quantitative success metric, these tools will iteratively find the optimal phrase to feed into the LLM. Battle and his collaborators found that in almost every case, this automatically generated prompt did better than the best prompt found through trial-and-error. And, the process was much faster, a couple of hours rather than several days of searching.

What does the end of ZIRP mean for common software engineering practices? From Gergely Orosz
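Back on the prompt-engineering study for a second: the "few examples plus a quantitative success metric" loop from the IEEE excerpt can be sketched roughly as below. This is a toy under loud assumptions, not the tooling Battle and Gollapudi used. `toy_model` and `toy_propose` are hypothetical stubs (in the real tools an LLM proposes the rewrites and answers the questions), and the stub is rigged so that one phrase helps, which the study says is not something you can count on.

```python
from typing import Callable, Dict, List, Tuple

Example = Tuple[str, str]           # (question, expected answer)
Model = Callable[[str, str], str]   # (prompt, question) -> answer


def score(prompt: str, model: Model, examples: List[Example]) -> float:
    """Success metric: fraction of examples answered correctly."""
    hits = sum(1 for q, expected in examples if model(prompt, q) == expected)
    return hits / len(examples)


def optimize_prompt(seed: str, propose: Callable[[str], List[str]],
                    model: Model, examples: List[Example],
                    rounds: int = 3) -> Tuple[str, float]:
    """Greedy search: keep whichever candidate prompt scores best so far."""
    best, best_score = seed, score(seed, model, examples)
    for _ in range(rounds):
        for candidate in propose(best):
            s = score(candidate, model, examples)
            if s > best_score:
                best, best_score = candidate, s
    return best, best_score


ANSWERS: Dict[str, str] = {"2+3": "5", "4*6": "24", "10-7": "3"}


def toy_model(prompt: str, question: str) -> str:
    # Hypothetical stub: rigged so one phrase unlocks every answer.
    if "step by step" in prompt:
        return ANSWERS[question]
    return ANSWERS[question] if question == "2+3" else "?"


def toy_propose(prompt: str) -> List[str]:
    # Hypothetical stub: a fixed list instead of LLM-written rewrites.
    return [prompt + " Be concise.", prompt + " Think step by step."]


examples = list(ANSWERS.items())
best, best_score = optimize_prompt(
    "Solve the problem.", toy_propose, toy_model, examples
)
print(best, best_score)
```

Swap the stubs for real model calls and a real eval set and you have the basic shape: the metric picks the prompt, not a human's intuition.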

Markets

🤯 Databricks! AI is Driving Record Sales at Multibillion-Dollar Databricks. An IPO Can Wait …(WSJ)

‘The markets seem pretty shut,’ says CEO Ali Ghodsi as the company announces $1.6 billion in revenue for its fiscal year, a 50% leap from the previous period

Like many tech firms, Databricks is cashing in on the AI bonanza by offering generative AI systems tailored to each business. “Every CEO, every CIO, wants to have generative AI as part of their strategy,” Ghodsi said. “That leads to them being willing to commit and do bigger deals with us.”

Following Palo Alto Networks’ brutal earnings call a few weeks ago where it lowered guidance for the next year, Zscaler came out and did the opposite - hit it out of the park - read my 🧵

Love it when the 🦍 are pounding their chests going after one another. Here's $CRWD @GeorgeKurtz 💪🏼 on true platforms in cybersecurity vs. bundling, cough cough from $PANW...

George Kurtz - Co-Founder, President, and Chief Executive Officer

Well, thanks, Brian. As you might imagine, I heard a lot about platformization over the last week. To me, it's kind of a made-up fugazi term. But what I believe our competitors are talking about is bundling, discounting, and giving products away for free, which is nothing new in software and security software.

It's been done for the last 30 years. So, when we think about what we've seen in the past with other competitors, we know free isn't free. And what customers are saying is more consoles, more point products masquerading as platforms create fatigue in their environment. And one of the things, again, that we've been focused on is that single-agent architecture, single platform, single console that allows us to stop the breach, but more importantly, drive down the operational cost and supply many use cases - or solve many use cases that are out there.

So, I've been around the block for a bit. I've seen this movie of wrap-and-roll and bundling together with multiple products that were acquired. And, you know, last time I saw that I was at McAfee. So, I don't know, Burt, you have anything to add to that?

Burt Podbere - Chief Financial Officer

Yeah. I think, George, look, at the end of the day, we sell on value. And we've never been a company that's done deep discounting. We've never been a company that has increased our prices to get more ARR.

That's not us. And at the end of the day for us, you know, we're here to help customers get the greatest amount of outcomes at the lowest TCO. Period. And to George's point, free is not free, and good is not good enough.

I'm a founder of a generative AI company and I agree with Benioff - the real power of an LLM comes from the data and the metadata used to describe that data - in fact, LLMs are really, really good at deriving metadata from unstructured or semistructured text. Once you have rich metadata, a world of opportunities opens up for developing platforms and apps, because rich metadata allows you to write code that generalizes well. When you can derive the semantic meaning of a message, then the individual schema of a message doesn't matter anymore - you can write code that doesn't depend on specific structure because you can classify the message into a specific set of semantics using an LLM. This is in fact what my company is doing in the observability space (if you're interested in learning more, respond to this and I'll DM you on LinkedIn - my profile on Substack is detached from my business one on LI).

I'll add that the AI interface discussion is an interesting one. We get asked all the time why we don't have a co-pilot or chat-like interface in our product. We may add this one day, but right now we've found that those interfaces, while certainly cool, add a whole slew of complexity. We know how to interrogate our LLM so that it performs the broad set of specific tasks we need it to reliably and with minimal hallucination. The minute you start allowing users to prompt your model, you've got all kinds of complexity around prompt engineering, RAG, etc. just to nudge the model response in certain directions. I'm not sure this is ultimately what users are going to want - maybe for some use cases like search or summarization. But for enterprise users, they may very well want the AI to be "hidden" in the sense that the models are just under the hood, doing their thing quietly while making employees' workdays more efficient.

This is a really good comment and certainly what I see mostly in the market. Changing to prompts from clicks makes everything more complicated.