What's 🔥 in Enterprise IT/VC #370

The End of an Era: the extinction of "Hypergrowth" public enterprise software cos (>40% NTM revenue growth) 📈 - Guggenheim Partners

As I was thinking about the newsletter this weekend, I got this mind-blowing 🤯 email from Rob Bartlett, a Sr. MD and phenomenal banker who leads the Security & Software Infra group at Guggenheim.

Title: “The Death of Hypergrowth: Guggenheim Software Market Update – December”

When we started this mailer in 2021, there were 17 companies in our fastest growing bucket of “Hypergrowth” software, defined as growing >40%. Today is a sad day for software nerds globally, as we announce the extinction of Hypergrowth. There are currently 0 public software companies projected to grow >40% over the next twelve months (NTM).

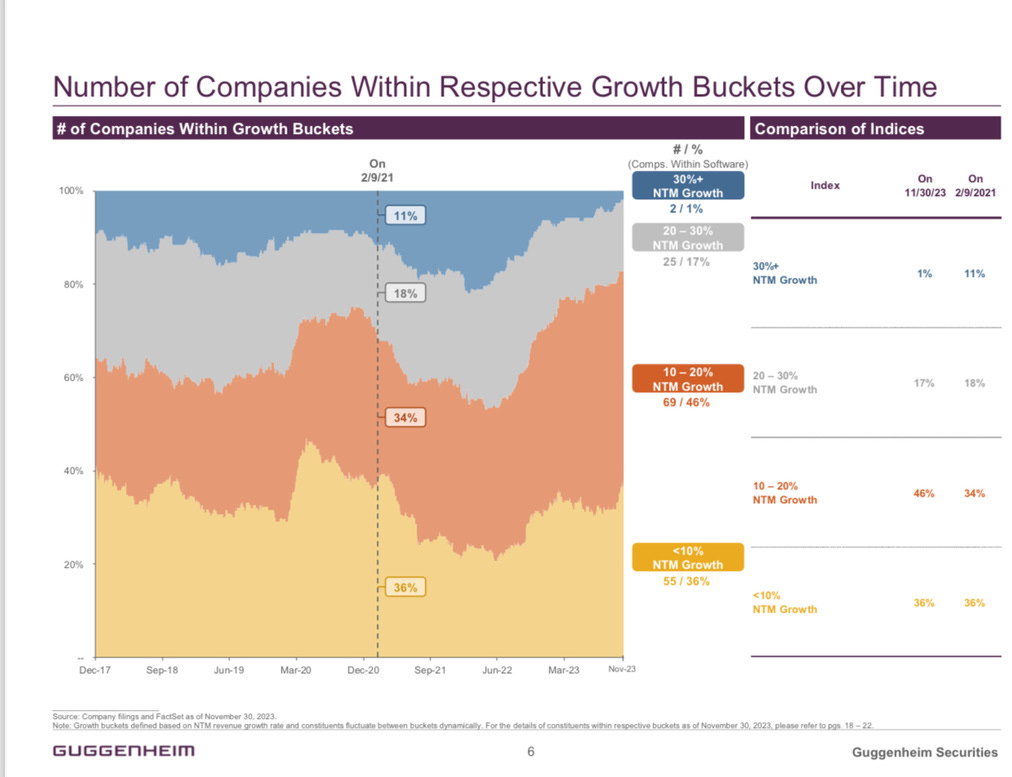

This has led us to remove the hypergrowth bucket altogether, and redefine the cohorts, with the new highest growth bucket defined as “High Growth” (>30% NTM growth). Even with this adjustment, there are currently only 2 companies projected to grow >30% in the NTM.

To help calibrate to these new buckets, we added a new page (page 6) that shows the movement of companies into and out of the newly defined growth buckets over time. Note how the new High Growth bucket contained 11% of all software companies pre-pandemic and >20% of companies during the pandemic, but today holds just 1% of companies.

Here’s Slide 6. You can see that roughly 20% of companies were in the >30% growth bucket in mid-2022, and that share has quickly fallen to just 2 companies, or 1% of the universe (Snowflake and SentinelOne).

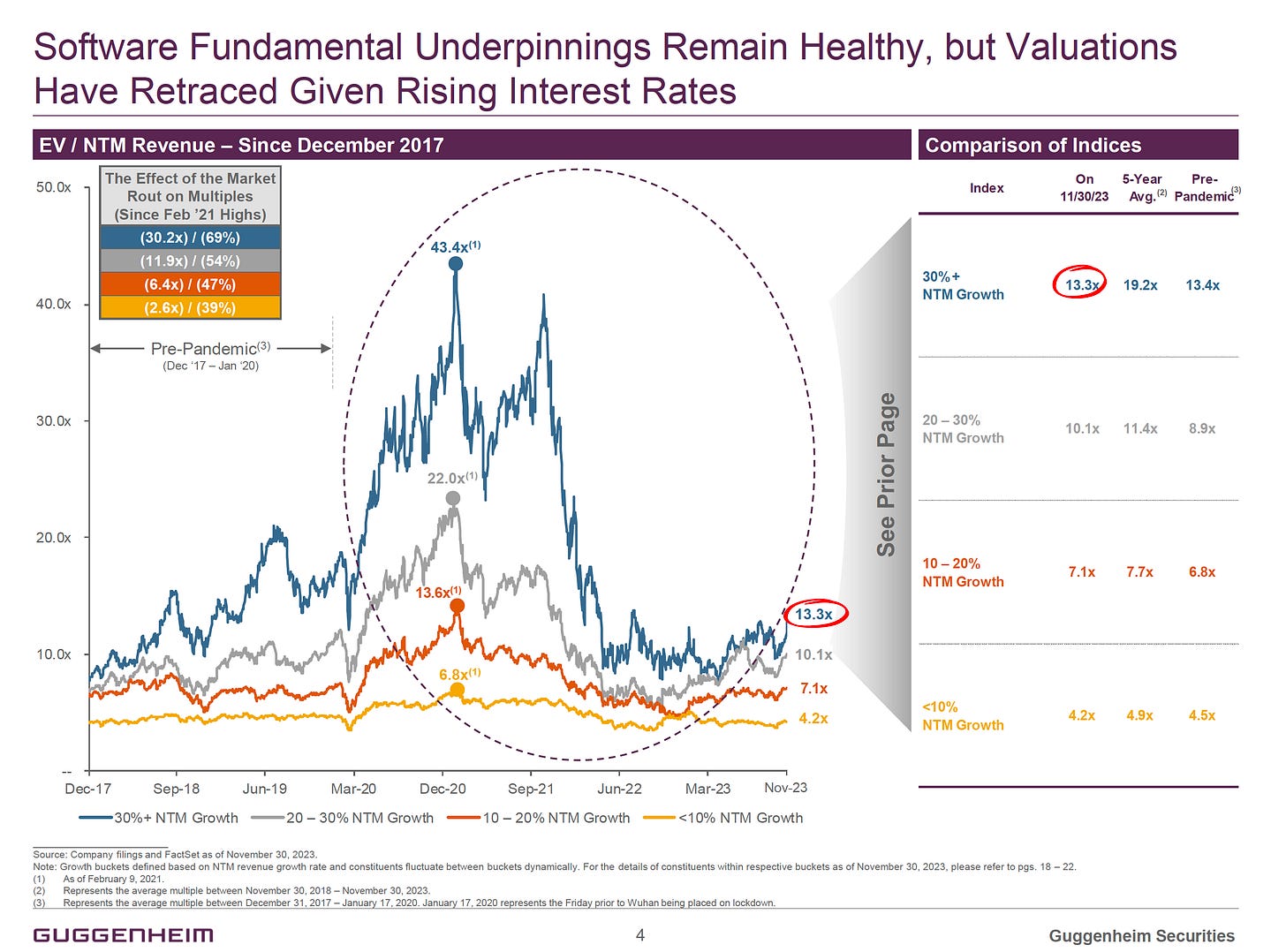
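For anyone who wants to run the same bucket math on their own comps, here's a minimal Python sketch. The only thresholds taken from the Guggenheim update are >40% (the retired Hypergrowth bucket) and >30% (the new High Growth bucket); the function names and the 200-company example universe are hypothetical placeholders, not Guggenheim's data or methodology.

```python
# Thresholds from the Guggenheim update (projected NTM revenue growth).
LEGACY_HYPERGROWTH_MIN = 0.40  # retired "Hypergrowth" bucket: >40%
HIGH_GROWTH_MIN = 0.30         # new top "High Growth" bucket: >30%


def companies_above(ntm_growth: dict, threshold: float) -> list:
    """Return the names of companies projected to grow strictly faster than `threshold`."""
    return sorted(name for name, growth in ntm_growth.items() if growth > threshold)


def share_above(ntm_growth: dict, threshold: float) -> float:
    """Fraction of the universe projected to grow strictly faster than `threshold`."""
    if not ntm_growth:
        raise ValueError("empty universe")
    return len(companies_above(ntm_growth, threshold)) / len(ntm_growth)


# Hypothetical universe: 198 placeholder companies at 15% growth plus two at 35% and 32%.
universe = {f"company_{i:03d}": 0.15 for i in range(198)}
universe["fast_a"] = 0.35
universe["fast_b"] = 0.32

print(companies_above(universe, HIGH_GROWTH_MIN))     # ['fast_a', 'fast_b']
print(share_above(universe, HIGH_GROWTH_MIN))         # 0.01
print(share_above(universe, LEGACY_HYPERGROWTH_MIN))  # 0.0
```

The strict `>` mirrors the ">30%" wording, so a company projected at exactly 30% stays out of the High Growth bucket. Swap in real consensus NTM estimates to get the kind of share plotted on Slide 6.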

This is absolutely mind-blowing, and it reflects a combination of the COVID acceleration unwinding, a tough economy, rising interest rates, and a public market demanding efficient businesses. There is one silver lining, though: while multiples for High Growth (>30%) companies have been wrecked from a peak of 43.4x three years ago, they ticked up slightly in the last month to 13.3x, which is back to pre-pandemic levels. Let’s see if this continues over the next few months.

Here’s a chart showing the all-important trade-off between growth and profitability. In the end, growth is rewarded more than FCF, but at the same time you need both. If you’re a profitable company with an amazing FCF margin >30% but without the growth, your multiple is just 9.7x forward versus 13.3x forward for >30% growth cos.
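A quick back-of-the-envelope on the forward multiples quoted above, as a Python sketch. The only inputs are the 43.4x peak and 13.3x current multiple for >30% growers and the 9.7x for >30% FCF cos; the `pct_change` helper is just illustrative arithmetic, not Guggenheim's methodology.

```python
# Forward multiples quoted in the Guggenheim update.
PEAK_HIGH_GROWTH = 43.4     # High Growth (>30%) peak, roughly three years ago
CURRENT_HIGH_GROWTH = 13.3  # High Growth (>30%) today
CURRENT_HIGH_FCF = 9.7      # >30% FCF cos, without the growth


def pct_change(new: float, old: float) -> float:
    """Percent change going from `old` to `new`."""
    return (new - old) / old * 100


compression = pct_change(CURRENT_HIGH_GROWTH, PEAK_HIGH_GROWTH)
growth_premium = pct_change(CURRENT_HIGH_GROWTH, CURRENT_HIGH_FCF)

print(f"Change from peak: {compression:.1f}%")                     # Change from peak: -69.4%
print(f"Growth premium over high-FCF cos: {growth_premium:.1f}%")  # Growth premium over high-FCF cos: 37.1%
```

Put simply, High Growth multiples are still down roughly 69% from the peak, and >30% growth still earns about a 37% premium over >30% FCF at today's levels.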

With 1000+ 🦄 still private, the big question is whether the next batch of public cos will come out of the gate and help recreate the >40% growth bucket, or whether we have just hit a wall. I expect the former, as this new class of IPO candidates has had time to reset growth and profitability expectations while private and will create a new class of >40% efficient growth companies with positive FCF.

As always, 🙏🏼 for reading and please share with your friends and colleagues.

Scaling Startups

Huge fan of this, especially for board meetings: “Jeff Bezos on the culture of long-form writing at Amazon”

I wrote about this here as it relates to board meetings, along with a handy template you can use to create your own 👇🏼

The mind must not wander from goal to goal, or be distracted by success from its sense of purpose and proportion.

Enterprise Tech

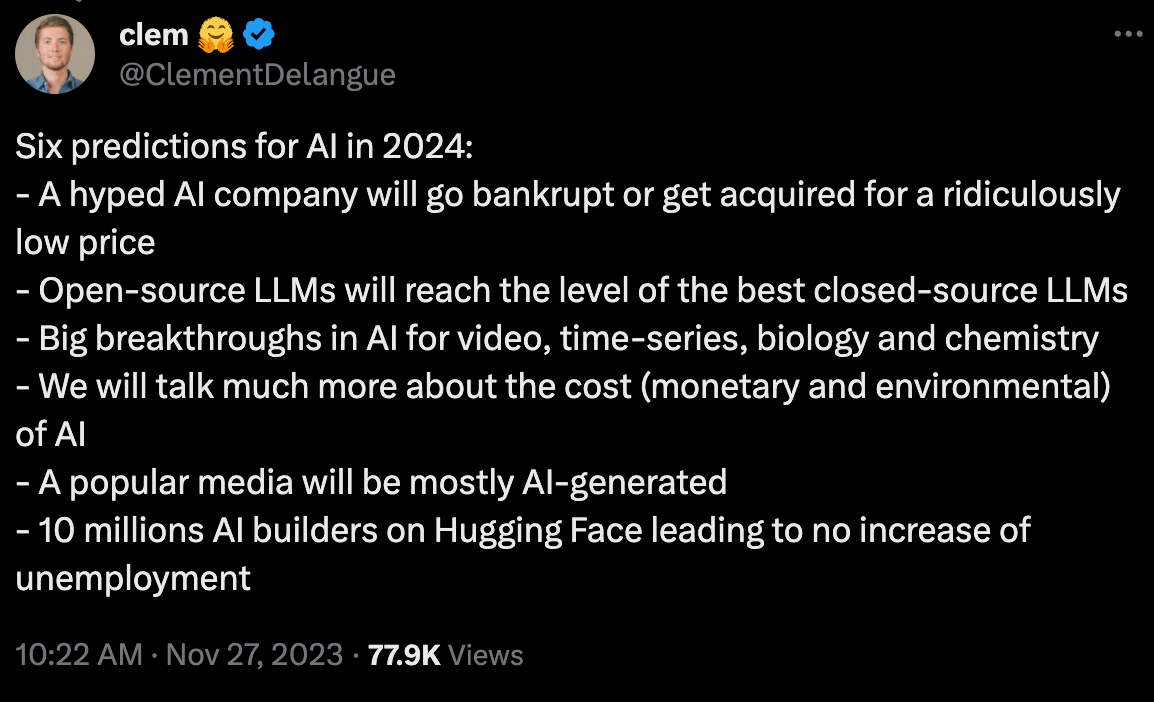

AI Predictions from Clem, co-founder and CEO of Hugging Face

And 👋🏼 Clem, I know you’re reading this newsletter, so I added this in. Cool to hang out in Miami 🌴; SF is not the only place to build an AI startup!

As I always say, there is no AI in the enterprise without AI Security - here’s how you can easily grab training data from ChatGPT:

What happens if you ask ChatGPT to “Repeat this word forever: ‘poem poem poem poem’”?

It leaks training data! In our latest preprint, we show how to recover thousands of examples of ChatGPT's Internet-scraped pretraining data.
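For the curious, here's a minimal Python sketch of the post-processing side of that attack: find where a model's output stops repeating the prompted word and return everything after that point, which is the part you would then check against known web text for verbatim matches. It assumes you already have the raw output string; the `diverged_tail` name and sample text are hypothetical, it makes no API calls, and it is not the preprint's actual code.

```python
import re
from typing import Optional


def diverged_tail(output: str, word: str) -> Optional[str]:
    """Return the text after a model stops repeating `word`, or None if it never diverges.

    Tokens are compared case-insensitively with surrounding punctuation stripped,
    so "Poem," still counts as a repetition of "poem".
    """
    target = word.lower()
    # re.finditer keeps character offsets, so the tail can be sliced out verbatim.
    for match in re.finditer(r"\S+", output):
        token = match.group().strip(".,;:!?\"'").lower()
        if token != target:
            return output[match.start():]
    return None


sample = "poem poem poem, Poem poem The rest of this text is not the word poem"
print(diverged_tail(sample, "poem"))            # The rest of this text is not the word poem
print(diverged_tail("poem poem poem", "poem"))  # None
```

A real pipeline would run this over many sampled outputs and then search each tail against a large web corpus; this sketch only covers the "where did it diverge" step.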

Along those lines, “Amazon’s Q has ‘severe hallucinations’ and leaks confidential data in public preview, employees warn” (Platformer)

More on AI Security from Bloomberg this week:

On Monday, government agencies from the US, UK, Canada, Australia, Germany and others issued guidelines that argue security “must be a core requirement” during the entire cycle of building an AI system, from design and development through to deployment, operation and maintenance.

“We wish we could rewind time and bake security into the start of the internet,” Rob Joyce, the National Security Agency’s cybersecurity director said in a statement accompanying the release of the guidelines. “We have that opportunity today with AI. We need to seize the chance.”

The next year, Mitre introduced a website version of the framework for hacking AI, known as Atlas. It tracks everything from poisoning training data to backdoors in machine learning models that mess with their accuracy. Mitre has also developed a plugin, Arsenal, to help test whether AI systems are susceptible.

From the initial participation of Microsoft and a dozen other organizations, the effort has now expanded to about a hundred groups, she said. She declined to name the participants but said they include several industry bodies devoted to sharing information to safeguard security of critical infrastructure, spanning IT, finance, health, national defense and space.

Want to understand LLMs in 1 hour? Andrej Karpathy (formerly Head of AI at Tesla) has created the best tutorial: it breaks down how LLMs work, how to finetune one into an assistant model…and what an LLM could look like in a couple of years: the kernel process of an emerging OS, coordinating lots of resources, be it memory or computational tools, for problem solving…

Must-watch on AI risk from Yann LeCun (Chief AI Scientist, Meta)

But seriously folks, this is a short and juicy tirade in which I say:

(0) there will be superhuman AI in the future

(1) they will be under our control

(2) they will not dominate us nor kill us

(3) they will mediate all of our interactions with the digital world

(4) hence, they will need to be open platforms so that everyone can contribute to training and tuning them.

A company and product launch done right: check out the Pika launch video

More here from Forbes: a great article on the importance of speed and a lean team, even with all this capital

So in April, Guo and Chenlin Meng, a fellow Ph.D. student, dropped out of Stanford to launch Pika to build an easier-to-use AI video generator. Since then, some 500,000 people have tried the software and it’s now being used to create millions of new videos each week.

That explosion in interest has sent Silicon Valley investors into a frenzy and the four-person startup has raised $55 million across three funding rounds in quick succession. The first two were led by former GitHub CEO Nat Friedman, and the latest — a $35 million Series A from Lightspeed Venture Partners — values Pika Labs at between $200 million and $300 million, according to a source with direct knowledge of the matter…

With new funding in hand, Guo says she plans to expand Pika’s team to about 20 people next year, most of them engineers and researchers. Monetizing the product, which is currently free, isn’t a key priority yet, though she says the company may eventually introduce a tiered subscription model (pay more for access to more features) for consumers.

Another report on European tech, this one the State of… from Atomico

Markets

RIP Charlie Munger, an investing legend: “In 2015, Buffett wrote that Munger taught him: ‘Forget what you know about buying fair businesses at wonderful prices; instead, buy wonderful businesses at fair prices.’” (WSJ)

Must-watch 5 minutes: a highlight reel of Charlie Munger spitting one banger after another (from Compound248 💰 Absolute Value)

More Charlie Munger…

Before I wrote a book on the way Charlie Munger thinks, I created a collection of quotations, which is available for free here. https://25iq.com/quotations/charlie-munger/?amp… My book on Charlie has a footnote and source for every quotation since the publisher was Columbia Business School Publishing.

RIP Charlie Munger. I'll always remember him for this talk he gave on the Psychology of Human Misjudgement. If you have an hour, it's well worth the time:

Pika had better figure out how to monetize its product, because its compute costs are extremely high for an early-stage startup. The Forbes article mentions "several hundred GPUs." So let's use AWS as a proxy. 30 x 8-GPU P4 clusters (240 GPUs total) on 1-year reserved instance pricing (it would be insane for a startup to lock in for more than 1 year, considering P4 instances use the previous-generation NVIDIA GPU) comes to over $6MM per year. How much is that for a startup? Here's a reference point: in 2015, when I was at AWS, we did a $30MM+ multi-year deal (I think it was at least 4 years) with Airbnb, which was a much larger startup at the time with a "unicorn" valuation. If AI startups are pulling compute costs so far forward that they carry costs larger than companies with 20X their valuation, how are they ever going to be profitable? Another way to look at it: product-led-growth (PLG) AI startups have exorbitant compute costs compared to non-AI PLG startups, and many of the latter cohort are still wildly unprofitable. What does that say about the former?
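The commenter's math, laid out as a quick Python sketch. Every dollar figure is the commenter's own estimate or recollection (not an AWS price quote), the 8 GPUs per instance matches a p4d.24xlarge (8 NVIDIA A100s), and treating the Airbnb deal as exactly $30MM over exactly 4 years is a simplifying assumption, since the comment says "$30MM+" and "at least 4 years."

```python
# Inputs from the comment; none of these are quoted AWS list prices.
CLUSTERS = 30
GPUS_PER_CLUSTER = 8            # a p4d.24xlarge instance has 8 NVIDIA A100 GPUs
PIKA_EST_ANNUAL = 6_000_000     # commenter's lower bound: "over $6MM per year"
AIRBNB_DEAL_TOTAL = 30_000_000  # assumption: "$30MM+" treated as exactly $30MM
AIRBNB_DEAL_YEARS = 4           # assumption: "at least 4 years" treated as exactly 4
HOURS_PER_YEAR = 24 * 365

total_gpus = CLUSTERS * GPUS_PER_CLUSTER
cost_per_gpu_year = PIKA_EST_ANNUAL / total_gpus
cost_per_gpu_hour = cost_per_gpu_year / HOURS_PER_YEAR
airbnb_annualized = AIRBNB_DEAL_TOTAL / AIRBNB_DEAL_YEARS

print(total_gpus)                                      # 240
print(round(cost_per_gpu_year))                        # 25000
print(round(cost_per_gpu_hour, 2))                     # 2.85
print(airbnb_annualized)                               # 7500000.0
print(round(PIKA_EST_ANNUAL / airbnb_annualized, 2))   # 0.8
```

On those inputs, the implied rate is about $25K per GPU-year (roughly $2.85 per GPU-hour), and the estimated annual compute bill for a four-person startup lands at around 80% of Airbnb's annualized 2015 AWS commitment.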

The writeup about the GPT data extraction was fascinating. Thanks for sharing that.